At DeNexus, we talk a lot about Cyber Risk Aggregation. This obsession may not be good for our social lives, but it is good for our customers in the insurance and (re)insurance industries reconciling the new elevated cyber risks of their clients, and the costs associated with them.

What is "Cyber Risk Aggregation" Anyway?

Broadly, risk aggregation means analyzing the interactions between individual risks in order to build a bigger, more accurate picture of their combined impact. Today, managing cyber risk aggregation is one of the top concerns for the insurance industry because 1) cyber risks are on the rise and 2) the insurance industry lacks the mechanisms to quantify that risk accurately.

For example, when you consider that a single cyber incident at a large industrial facility can affect its insurer by spreading into other parts of the insurer's portfolio, the business implications of an accurate cyber risk aggregation methodology are enormous. Such a methodology can have reverberating positive effects on insurers' and (re)insurers' ability to allocate capital efficiently and to price policies.

So why has more attention been placed on Cyber Risk Aggregation?

First of all, cyber risk aggregation is a relatively new problem for insurance markets, so the industry has little experience evaluating the accumulation of this type of risk.

For other types of risk, such as natural catastrophe risk, insurers are much more experienced and have larger, less dynamic and readily available data sets with which to conduct accurate risk assessment and risk aggregation calculations. For example, a hurricane or other natural disaster may trigger a surge in claims in the physical world, but these claims are limited to a particular geographic area. Additionally, long-running and detailed data sources exist on hurricane behavior, location and damages. This is not the case when it comes to cyber risk.

In cyber risk, a single cyberattack can result in claims that erupt across the globe, and financial loss events can be very nuanced and complex. The WannaCry ransomware attack, for example, infected over 200,000 computer systems in 150 countries, severely disrupting organizations like FedEx and the UK's National Health Service, all in one fell swoop. No accurate loss aggregation model existed for insurers or (re)insurers for such an event. The industry demands one.

Closely related to cyber risk aggregation is systemic risk: the notion that one incident could cause a cascading failure that triggers the collapse of an entire system. An example would be a cyberattack that takes down the power grid and thus impacts sectors from transportation to communications and healthcare. This is a realistic scenario, and it is inherent in cyber risk today.

Risk Aggregation for Industrial Enterprises (Risk Owners)

Cyber risk aggregation arises both inside and outside the organization and acts as a multiplier on the scale and scope of a cyber incident. As a result, individual organizations can suffer large, even catastrophic, financial losses.

For industrial enterprises, risk aggregation means estimating the loss distribution across all of the enterprise's facilities, OT (Operational Technology), IT (Information Technology) and business platforms. It should also account for outside services, partners, and vendors.
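To make the idea of an owner-level loss distribution more concrete, here is a minimal sketch, assuming a very simple common-shock model: each facility can suffer an isolated incident, and a shared incident (for example through the corporate network) can hit every facility at once. The function names, probabilities and loss figures are hypothetical and chosen only for illustration; this is not a description of the DeRISK engine.

```python
import random

def simulate_owner_losses(facility_losses, p_single, p_shared, n_years=20_000, seed=7):
    """Simulate yearly owner-level losses under a simple common-shock model.

    facility_losses: hypothetical loss (in $) if a given facility is hit.
    p_single: yearly probability of an isolated incident at each facility.
    p_shared: yearly probability of one incident that hits every facility.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(n_years):
        shared = rng.random() < p_shared
        total = 0.0
        for loss in facility_losses:
            # A facility loses money if the shared incident occurs
            # or if it suffers its own isolated incident.
            if shared or rng.random() < p_single:
                total += loss
        totals.append(total)
    return totals

def quantile(values, q):
    """Empirical quantile: the value at position q in the sorted sample."""
    ordered = sorted(values)
    idx = min(len(ordered) - 1, int(q * len(ordered)))
    return ordered[idx]

# Hypothetical owner with three facilities (losses in $).
facilities = [2e6, 3e6, 5e6]
independent = simulate_owner_losses(facilities, p_single=0.05, p_shared=0.0)
with_shared = simulate_owner_losses(facilities, p_single=0.05, p_shared=0.02)

print("99th percentile, isolated incidents only:", quantile(independent, 0.99))
print("99th percentile, with shared incident:   ", quantile(with_shared, 0.99))
```

Under these assumed numbers, even a small probability of a shared incident pushes the tail of the owner's loss distribution well above what the facilities would produce if they failed independently, which is the multiplier effect described above.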

The facility manager, and risk stakeholders in general, will use the cyber risk estimation to manage their exposures and ensure stability, for example by having the proper controls in place. Additionally, understanding the inherent cyber risk within this domain aligns cybersecurity initiatives, investments and budgets with the bottom line and the board room of the organization. As cyber risk becomes more central to the overall risk management conversation, this is critically important to the C-level of industrial organizations and to their shareholders.

Aggregation for Cyber Risk Insurers and Underwriters

The risk to cyber insurers and (re)insurers is that they can suffer losses from the same incident under multiple policies without having factored that risk into the policies they are underwriting. Inevitably, this results in both cyber insurers and (re)insurers having the incorrect capacity to support their clients and/or portfolio.

Simply put, cyber insurers need to quantify and measure scenarios of widespread impact, defined by business interruption, data-breach losses, etc., across thousands of businesses all at once.

The cyber insurance market is growing steadily, but it still lacks mathematical models that fully support its activity. As stated by Rory Egan, Senior Cyber Actuary at Munich Re: “This lack of trust in probabilistic aggregation models is evidenced by the lack of a meaningfully sized retro or capital market for cyber catastrophe risk, so far.”

The lack of historical profiles and actuarial data makes it challenging to undertake advanced modeling or to base underwriting decisions on meaningful, evidence-based analysis, even for individual risks. The problem gets worse when it comes to risk aggregation. Some of the challenges that need to be faced are:

- Identification of all sources of aggregation

- Non-obvious and evolving aggregation paths

- Potentially limitless number of different aggregation scenarios

- A highly dynamic area with a great deal of uncertainty

Traditional Approach

From the actuarial perspective, some approaches are:

- Aggregation of cyber risk across business lines

- Aggregation in cyber coverage related to vendor risk

- Market share approach

The DeNexus Approach

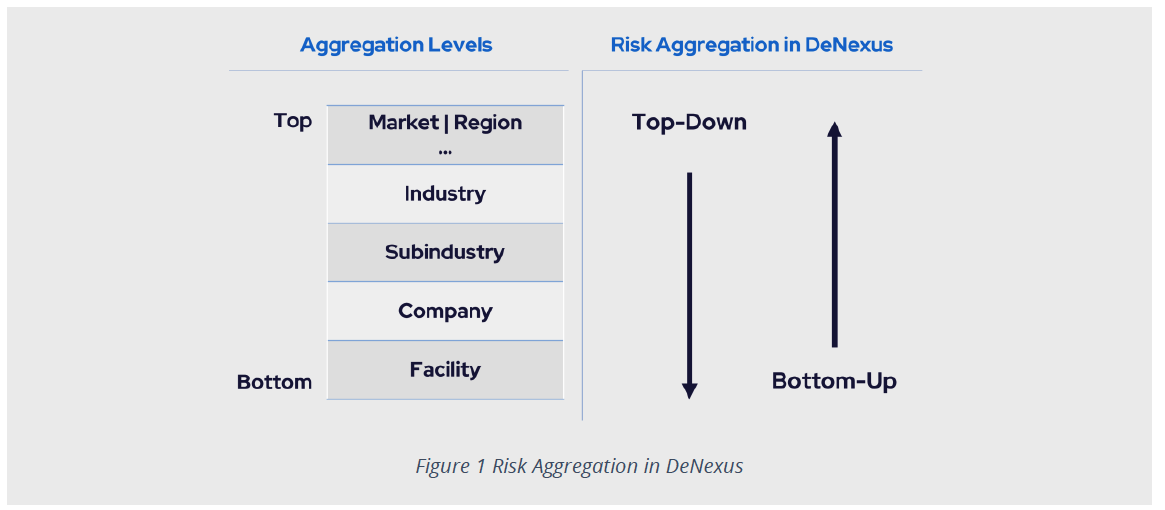

To provide the most reliable risk estimations, we work with a dual approach that combines bottom-up and top-down views of the risk. In other words, we look at risk from two perspectives: (1) that of the industrial owners of facilities, who need to understand and mitigate their risk at the facility and portfolio levels to make better-informed decisions on reducing loss exposure (unmitigated risks may be uninsurable risks); and (2) that of the insurers, who underwrite the risk and need to diversify their portfolios.

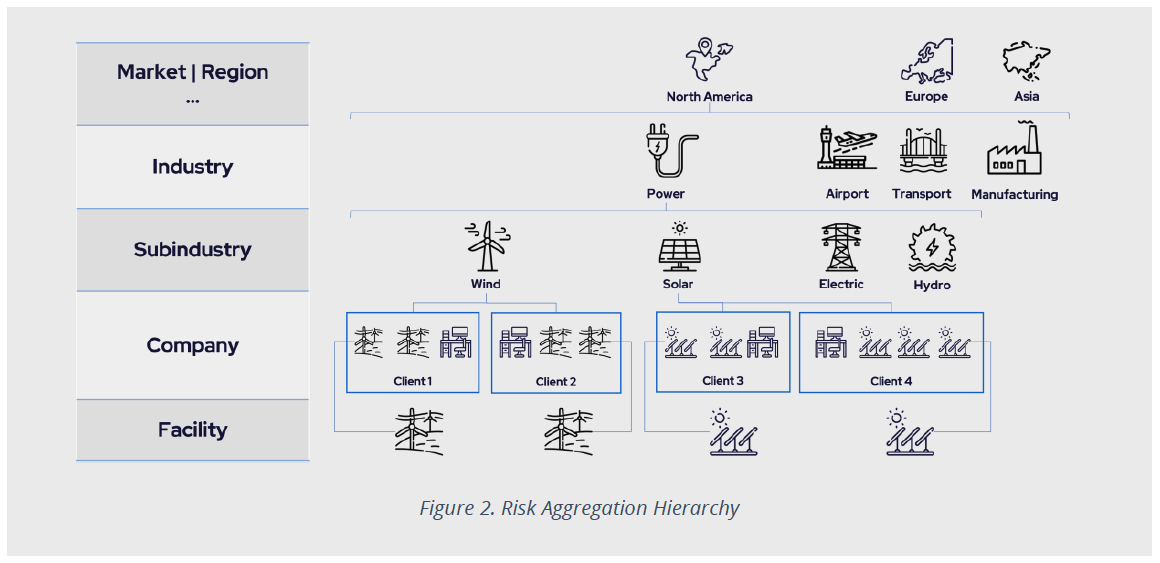

Consider the schema shown in Figure 2 to understand both approaches and how they converge. For OT environments, the most granular level of risk analysis is the facility level. The approach used to quantify individual (facility) risk can be reused for similar facilities. Then, to quantify the risk to the owner of those facilities, the interconnections between facilities and with the corporate IT network must be considered.

Risk quantification for companies in the same sub-industry, say wind power generation, will follow a similar approach; however, due to differences in industrial processes, risk quantification for companies in another sub-industry, say oil & gas, must be adapted accordingly.

The new elements that appear at each hierarchical level can be evaluated and incorporated into the risk aggregation once all the previous ones are already part of the quantification system. This analysis can be performed up to the top level where different lines of business are analyzed and where portfolio diversification lies.

Bottom-up

The bottom-up approach goes from individual to portfolio risk, and it is especially relevant for large industrial companies due to the large unit risk potential. The DeRISK platform has the best risk quantification engine, which provides an accurate estimate of the loss distribution for a given facility. Building on this, we are expanding the system to incorporate both the convergence of OT and corporate IT networks and the risk accumulation across all the similar facilities of a given client.

Some of the advantages of the bottom-up approach are:

- Based on a deep understanding of the individual sources of risk

- Uses granular data to quantify the risk

- Provides flexibility to adapt to new scenarios/new data

- Provides reliable results because industry experts can leverage their opinions with empirical evidence-based data

The bottom-up aggregation is completed in four stages:

- Compute the loss distribution considering all the (similar) facilities for a client in a specific sub-industry

- Aggregate the risk for clients in the same sub-industry

- Aggregate the risk for clients in the same industry vertical

- Aggregate the risk for clients in different verticals, geographies or business lines
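The four stages can be sketched as a hierarchical roll-up of simulated losses. This is a minimal illustration, assuming that each facility already has a list of per-scenario losses and that position `i` in every list refers to the same simulated scenario, so that summing position by position preserves any dependence between facilities. The clients, sub-industries, verticals and figures are hypothetical.

```python
# Hypothetical per-scenario losses (in $M), keyed by
# (vertical, sub-industry, client, facility).
facility_losses = {
    ("energy", "wind", "Client A", "Farm 1"): [0, 2, 0, 5],
    ("energy", "wind", "Client A", "Farm 2"): [0, 1, 0, 5],
    ("energy", "wind", "Client B", "Farm 3"): [1, 0, 0, 4],
    ("energy", "oil & gas", "Client C", "Refinery 1"): [0, 0, 3, 0],
    ("manufacturing", "chemicals", "Client D", "Plant 1"): [0, 0, 0, 6],
}

def roll_up(losses, depth):
    """Sum scenario losses over entries that share the first `depth` key fields."""
    grouped = {}
    for key, scenario_losses in losses.items():
        prefix = key[:depth]
        current = grouped.get(prefix)
        if current is None:
            grouped[prefix] = list(scenario_losses)
        else:
            grouped[prefix] = [a + b for a, b in zip(current, scenario_losses)]
    return grouped

by_client = roll_up(facility_losses, 3)        # stage 1: all facilities of a client
by_subindustry = roll_up(facility_losses, 2)   # stage 2: clients in the same sub-industry
by_vertical = roll_up(facility_losses, 1)      # stage 3: clients in the same vertical
portfolio = roll_up(facility_losses, 0)[()]    # stage 4: across verticals

print(by_client[("energy", "wind", "Client A")])
print(by_subindustry[("energy", "wind")])
print(by_vertical[("energy",)])
print(portfolio)
```

Summing scenario by scenario, rather than adding summary statistics such as percentiles, is a deliberate choice in this sketch: tail measures are generally not additive, so the distribution at each level has to be rebuilt from the level below.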

Top-Down

The top-down approach starts with the traditional aggregation scenario that considers the accumulation of losses across multiple organizations and sectors. In this case, some existing solutions put their effort into identifying key factors driving losses at a portfolio level and assessing critical scenarios or cyber disasters. One case is the identification of potential interconnectivity of cyber risks. For example, if numerous industrial companies in the same sector are using the same IT system, and something happens to that system that affects all companies in that industry, there is the potential for sizable claims.
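The shared-system example can be sketched as a simple accumulation check. This is a minimal illustration, assuming the insurer knows which IT systems each insured depends on and uses policy limits as a crude upper bound on claims from one incident; the insureds, system names and limits are hypothetical, and a real model would also weigh the probability and severity of each scenario.

```python
from collections import defaultdict

# Hypothetical portfolio: (insured, policy limit in $, IT systems the insured depends on)
portfolio = [
    ("Wind operator A", 5_000_000, {"SCADA-X", "Cloud-1"}),
    ("Utility B", 10_000_000, {"SCADA-X"}),
    ("Refinery C", 8_000_000, {"Cloud-1", "ERP-Z"}),
    ("Pipeline D", 4_000_000, {"SCADA-X", "ERP-Z"}),
]

def exposure_by_system(policies):
    """Sum policy limits over all insureds that depend on each system."""
    exposure = defaultdict(int)
    for _insured, limit, systems in policies:
        for system in systems:
            exposure[system] += limit
    return dict(exposure)

exposure = exposure_by_system(portfolio)
# The system whose failure would touch the largest total limit.
worst_system = max(exposure, key=exposure.get)

for system, total in sorted(exposure.items(), key=lambda item: -item[1]):
    print(f"{system}: {total:,}")
print("Largest accumulation:", worst_system)
```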

With this approach, we avoid known problems associated with frequency and severity modeling due to the lack of data, the strong dynamics of the sector, and the potential diversity among clients. And we consider the number of clients that insurers require to have effective risk diversification and adequate premiums.

The top-down approach has significant limitations when it comes to large industrial cyber risk, for the reasons explained above, which we are overcoming with our dual bottom-up and top-down analysis.

Why it Matters - Dual perspective, One Risk

At DeNexus, we are solving the cyber risk challenge shared by underwriters and industrial organizations alike by providing the financial quantification and real-time mitigation paths that the market desperately needs but does not have, and by enabling the underwriting of industrial cyber risk at scale. The challenge common to both stakeholders, asset owners on one side and insurers on the other, has led to an over-dependency on cybersecurity tooling without regard for the cost impact of cyber risk events.

DeNexus has emerged as an orchestrating engine between cybersecurity and cyber risk impact by aggregating the data required to achieve resiliency in the face of ever-changing cyber risks.
